Echoshot AI: Multi-Shot Portrait Video Generation

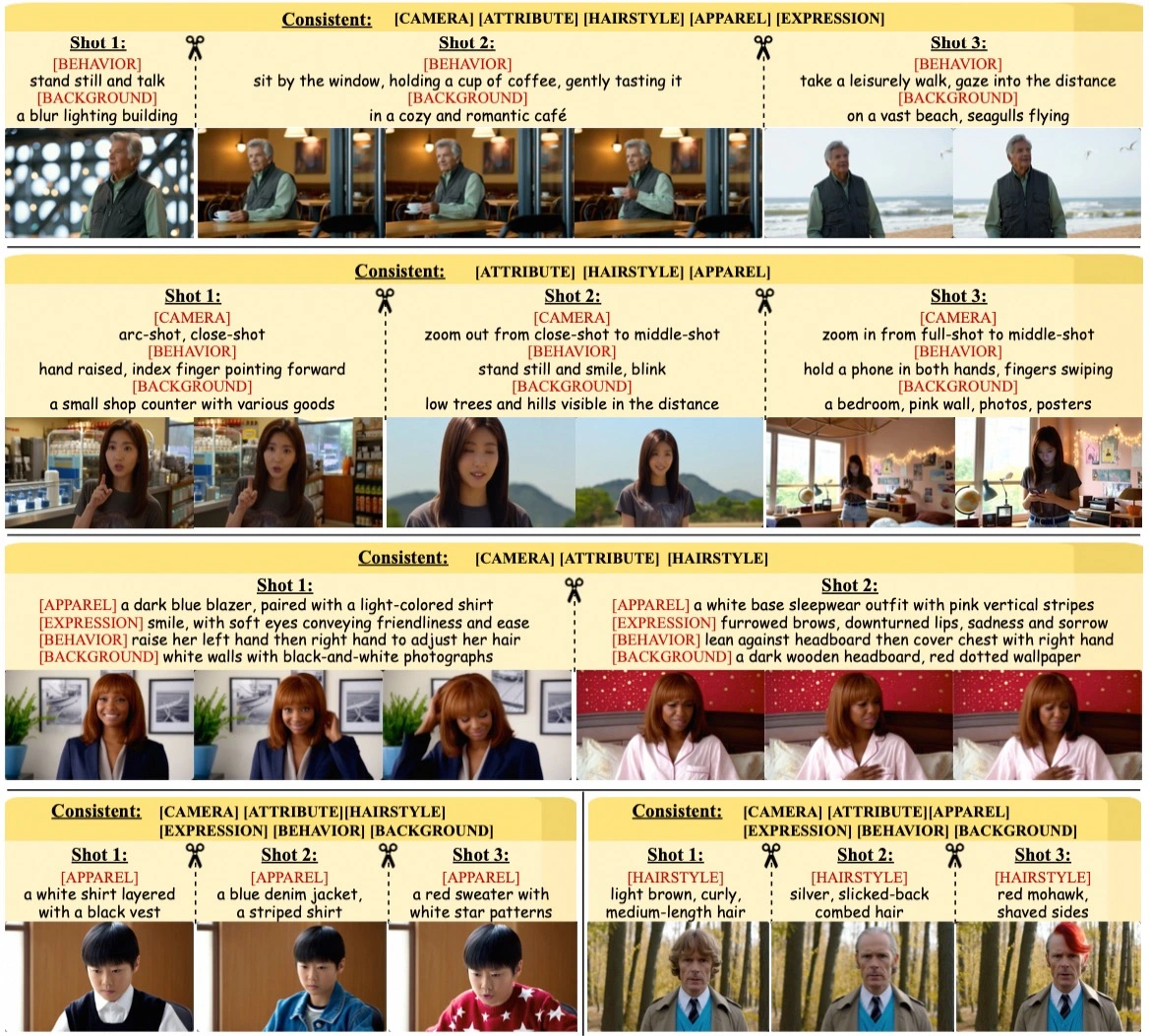

A native and scalable multi-shot framework for portrait customization built upon a foundation video diffusion model. Create consistent multi-shot portrait videos with identity preservation and flexible content controllability.

github.com/D2I-ai/EchoShot

github.com/D2I-ai/EchoShotWhat is Echoshot AI?

Echoshot AI represents a significant advancement in portrait video generation technology. This innovative framework addresses the limitations of traditional single-shot creation methods by enabling the generation of multiple video shots featuring the same person with remarkable identity consistency and content controllability.

Built upon a foundation video diffusion model, Echoshot AI introduces shot-aware position embedding mechanisms within its video diffusion transformer architecture. This design enables the system to model inter-shot variations effectively while establishing intricate correspondence between multi-shot visual content and their textual descriptions.

The framework trains directly on multi-shot video data without introducing additional computational overhead, making it both efficient and scalable for real-world applications in creative content production.

Key Capabilities

- Multi-shot portrait video generation with identity consistency

- Attribute-level controllability for personalized content

- Reference image-based personalized generation

- Long video synthesis with infinite shot counts

Overview of Echoshot AI

| Feature | Description |

|---|---|

| AI Technology | Echoshot AI |

| Category | Multi-Shot Portrait Video Generation |

| Primary Function | Identity-Consistent Video Creation |

| Model Version | EchoShot-1.3B-preview |

| Base Framework | Video Diffusion Transformer |

| Research Paper | arxiv.org/abs/2506.15838 |

| GitHub Repository | D2I-ai/EchoShot |

| Model Hub | HuggingFace: JonneyWang/EchoShot |

Technical Architecture

Shot-Aware Position Embedding

The core innovation of Echoshot AI lies in its shot-aware position embedding mechanisms. These embeddings are integrated within the video diffusion transformer architecture to model inter-shot variations effectively.

This design enables the system to establish intricate correspondence between multi-shot visual content and their textual descriptions, ensuring coherent narrative flow across different shots.

PortraitGala Dataset

Echoshot AI training is facilitated by PortraitGala, a large-scale and high-fidelity human-centric video dataset featuring cross-shot identity consistency.

The dataset includes fine-grained captions covering facial attributes, outfits, and dynamic motions, providing comprehensive training data for multi-shot video modeling.

Key Features of Echoshot AI

Multi-Shot Video Generation

Generate multiple video shots featuring the same person with consistent identity preservation. The system maintains character appearance, facial features, and personal characteristics across different shots and scenes.

Identity Consistency

Advanced identity preservation algorithms ensure that the same person appears consistent across all generated shots, maintaining facial features, expressions, and unique characteristics throughout the video sequence.

Flexible Content Control

Fine-grained control over various aspects including facial attributes, clothing, poses, and environmental settings. Users can specify detailed requirements for each shot while maintaining overall coherence.

Text-to-Video Generation

Transform textual descriptions into high-quality video content. The system interprets detailed prompts to generate corresponding visual content with remarkable accuracy and creativity.

Reference Image Integration

Use reference images to guide the generation process, ensuring that generated content aligns with specific visual requirements or maintains consistency with existing material.

Scalable Architecture

Built on efficient transformer architecture that scales to handle long video sequences with infinite shot counts without compromising quality or performance.

Installation and Setup Guide

Step 1: Download Code Repository

git clone https://github.com/D2I-ai/EchoShot

cd EchoShotStep 2: Create Environment

conda create -n echoshot python=3.10

conda activate echoshot

pip install -r requirements.txtStep 3: Download Models

pip install "huggingface_hub[cli]"

huggingface-cli download Wan-AI/Wan2.1-T2V-1.3B --local-dir .models/Wan2.1-T2V-1.3B

huggingface-cli download JonneyWang/EchoShot --local-dir ./models/EchoShotUsage Examples

Basic Video Generation

Create multi-shot portrait videos by providing textual descriptions. The system generates consistent character appearances across multiple shots while following the narrative described in your prompts.

bash generate.shTraining Custom Models

Train your own version of the model using custom datasets. Prepare video files with corresponding JSON annotations and configure training parameters according to your specific requirements.

bash train.shLLM Prompt Extension

For optimal performance, use LLM-based prompt extension to enhance the quality and coherence of generated content. Configure Dashscope API for enhanced prompt processing.

Set environment variables: DASH_API_KEY and DASH_API_URL for international users.

Applications and Use Cases

Content Creation

Professional video production for marketing, advertising, and entertainment content with consistent character representation across multiple scenes.

Educational Media

Create engaging educational videos with consistent instructor or character appearances across different lesson segments and topics.

Virtual Presentations

Generate professional presentation videos with consistent speaker identity across multiple sections and topics.

Social Media Content

Produce consistent personal branding content across multiple social media platforms and campaign segments.

Film and Animation

Support film production and animation studios with consistent character generation for different scenes and sequences.

Research and Development

Academic and industrial research applications in computer vision, machine learning, and video generation technologies.

Advantages and Limitations

Advantages

- Superior identity consistency across multiple shots

- Attribute-level controllability for fine-grained customization

- Scalable architecture supporting infinite shot counts

- Direct training on multi-shot data without computational overhead

- High-quality output with professional-grade results

- Flexible reference image integration capabilities

Limitations

- Requires substantial computational resources for training

- Performance depends on quality of training data

- Complex setup process for initial installation

- Limited to portrait and human-centric video generation

- Requires technical expertise for optimal configuration

Research Background

Echoshot AI emerges from comprehensive research addressing the limitations of existing video diffusion models. Traditional approaches were primarily constrained to single-shot creation, while real-world applications demand multiple shots with identity consistency and flexible content controllability.

The research team developed a novel shot-aware position embedding mechanism that enables direct training on multi-shot video data. This approach eliminates the need for additional computational overhead while maintaining high-quality output standards.

The PortraitGala dataset, specifically constructed for this research, features large-scale, high-fidelity human-centric video content with cross-shot identity consistency and fine-grained captions covering facial attributes, outfits, and dynamic motions.

Key Research Contributions

- Native multi-shot framework design

- Shot-aware position embedding mechanisms

- Large-scale human-centric video dataset

- Identity-consistent video generation algorithms

Latest Updates

July 15, 2025

EchoShot-1.3B-preview is now available on HuggingFace for public access and experimentation.

July 15, 2025

Official inference and training codes released at D2I-ai GitHub repository.

May 25, 2025

Initial research proposal and framework development for EchoShot multi-shot portrait video generation model.